publications

publications by category in reverse chronological order. generated by jekyll-scholar.

2025

- CHI ’25

Documents in Your Hands: Exploring Interaction Techniques for Spatial Arrangement of Augmented Reality Documents. Weizhou Luo, Mats Ole Ellenberg, Marc Satkowski, and Raimund Dachselt. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, Apr 2025.

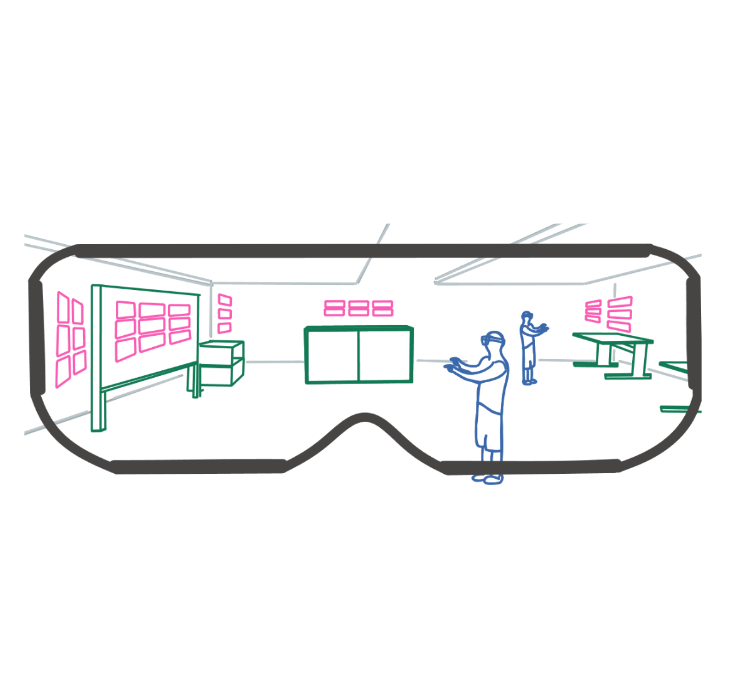

Augmented Reality (AR) promises to enhance daily office activities involving numerous textual documents, slides, and spreadsheets by expanding workspaces and enabling more direct interaction. However, there is a lack of systematic understanding of how knowledge workers can manage multiple documents and organize, explore, and compare them in AR environments. Therefore, we conducted a user-centered design study (N = 21) using predefined spatial document layouts in AR to elicit interaction techniques, resulting in 790 observation notes. Thematic analysis identified various interaction methods for aggregating, distributing, transforming, inspecting, and navigating document collections. Based on these findings, we propose a design space and distill design implications for AR document arrangement systems, such as enabling body-anchored storage, facilitating layout spreading and compressing, and designing interactions for layout transformation. To demonstrate their usage, we developed a rapid prototyping system and exemplify three envisioned scenarios. With this, we aim to inspire the design of future immersive offices.

@inproceedings{luo2025documents, author = {Luo, Weizhou and Ellenberg, Mats Ole and Satkowski, Marc and Dachselt, Raimund}, title = {Documents in Your Hands: Exploring Interaction Techniques for Spatial Arrangement of Augmented Reality Documents}, booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems}, series = {CHI '25}, year = {2025}, month = apr, isbn = {979-8-4007-1394-1}, location = {Yokohama, Japan}, numpages = {22}, doi = {10.1145/3706598.3713518}, url = {https://doi.org/10.1145/3706598.3713518}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, keywords = {spatial layout, content organization, interaction design, user-centered design, Mixed Reality} }

- CHI ’25 Workshop

Of Affordance Opportunism in AR: Its Fallacies and Discussing Ways Forward. Marc Satkowski, Weizhou Luo, and Rufat Rzayev. In CHI’25 Workshop: Beyond Glasses – Future Directions for XR Interactions within the Physical World, Yokohama, Japan, Apr 2025.

This position paper addresses the fallacies associated with the improper use of affordances in the opportunistic design of augmented reality (AR) applications. While opportunistic design leverages existing physical affordances for content placement and for creating tangible feedback in AR environments, their misuse can lead to confusion, errors, and poor user experiences. The paper emphasizes the importance of perceptible affordances and properly mapping virtual controls to appropriate physical features in AR applications by critically reflecting on four fallacies of facilitating affordances, namely, the subjectiveness of affordances, affordance imposition and reappropriation, properties and dynamicity of environments, and mimicking the real world. By highlighting these potential pitfalls and proposing a possible path forward, we aim to raise awareness and encourage more deliberate and thoughtful use of affordances in the design of AR applications.

@inproceedings{satkowski2025affordance, author = {Satkowski, Marc and Luo, Weizhou and Rzayev, Rufat}, title = {Of Affordance Opportunism in AR: Its Fallacies and Discussing Ways Forward}, booktitle = {CHI’25 Workshop: Beyond Glasses – Future Directions for XR Interactions within the Physical World}, series = {ACM CHI'25}, year = {2025}, month = apr, location = {Yokohama, Japan}, numpages = {5}, doi = {10.48550/arXiv.2503.20406} }

- TVCG ’25

Immersive Data-Driven Storytelling: Scoping an Emerging Field Through the Lenses of Research, Journalism, and Games. Julián Méndez, Weizhou Luo, Rufat Rzayev, Wolfgang Büschel, and Raimund Dachselt. IEEE Transactions on Visualization and Computer Graphics, Jan 2025.

In recent years, data-driven stories have found a firm footing in journalism, business, and education. They leverage visualization and storytelling to convey information to broader audiences. Likewise, immersive technologies, like augmented and virtual reality devices, provide excellent potential for exploring and explaining data, thus inviting research on how data-driven storytelling transfers to immersive environments. To gain a better understanding of this exciting novel research area, we conducted a scoping review on the emerging notion of immersive data-driven storytelling, extended by surveying immersive data journalism and by analyzing immersive games, selected based on community reviews and tags. We present our methodology for the survey and discuss prominent themes that coalesce the knowledge we extracted from the literature, journalism, and games. These themes include, among others, the spatial embodiment of narration, the incorporation of the users and their context into narratives, and the balance between guiding the user versus promoting serendipity. Our discussion of these themes reveals research opportunities and challenges that will inform the design of immersive data-driven stories in the future.

@article{MLRBD-2025-ImmDataStoriesReview, author = {M\'{e}ndez, Juli\'{a}n and Luo, Weizhou and Rzayev, Rufat and B\"{u}schel, Wolfgang and Dachselt, Raimund}, title = {Immersive Data-Driven Storytelling: Scoping an Emerging Field Through the Lenses of Research, Journalism, and Games}, journal = {IEEE Transactions on Visualization and Computer Graphics}, year = {2025}, month = jan, doi = {10.1109/TVCG.2025.3531138}, publisher = {IEEE}, address = {New Jersey} }

2024

- ISMAR-Adj ’24

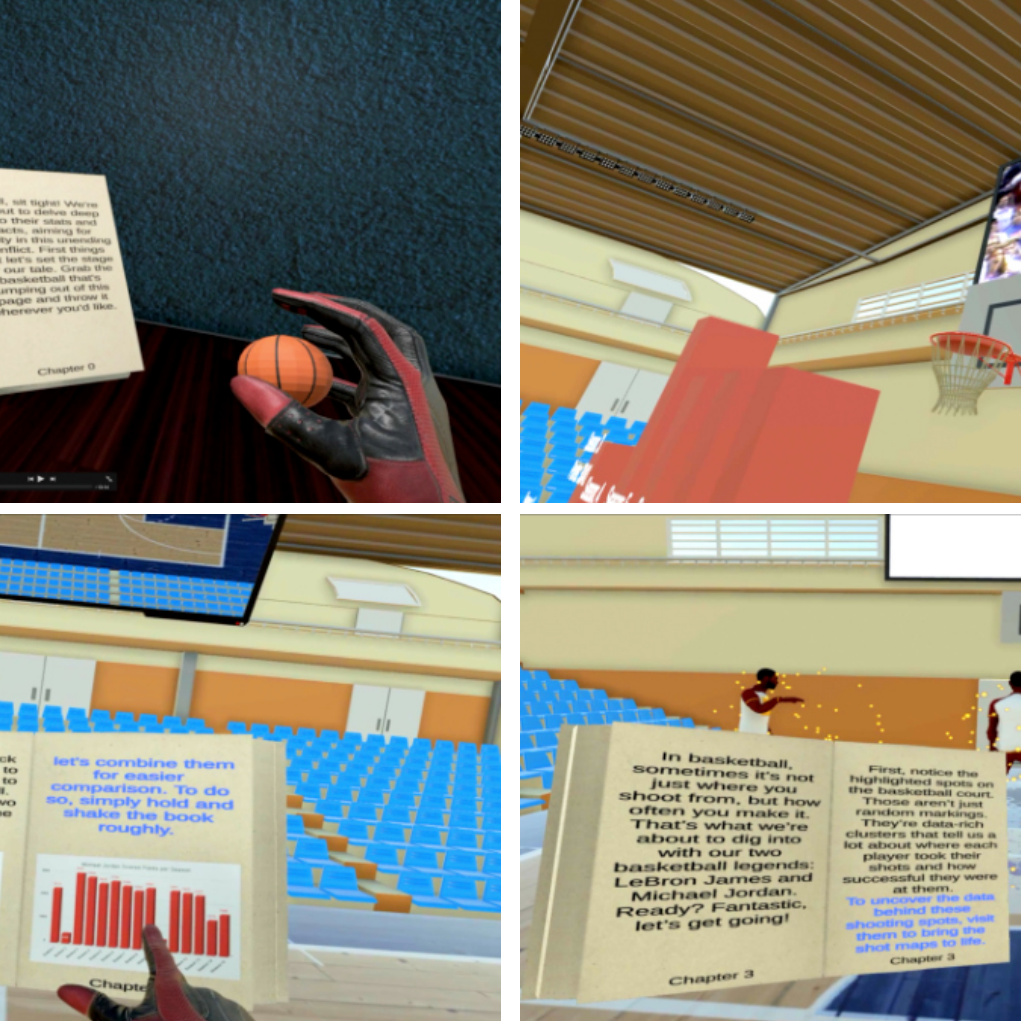

Between Pages and Reality: Exploring a Book Metaphor for Unfolding Data-driven Storytelling in Immersive Environments. Weizhou Luo, Mark Abdelaziz, Julián Méndez, and Rufat Rzayev. In Proceedings of 2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Seattle, Oct 2024.

Storytelling is an effective way to communicate information to people with different backgrounds and literacy. Immersive technologies like virtual reality (VR) have great potential for presenting engaging narratives and multimodal content in innovative ways. However, it is essential to ensure that the presentation and interaction possibilities are comprehensible to broad audiences. Drawing inspiration from the traditional role of books in preserving stories, we introduce StoryBook, a conceptual metaphor for interacting with and unfolding immersive data stories. Through analogical reasoning, we mapped existing concepts of physical books to interact with a VR storytelling process. StoryBook provides a familiar and intuitive way of navigating, inserting, and extracting story elements in VR, akin to page-flipping, bookmarking, or note-taking with a real book. We developed a prototype and conducted initial user feedback sessions, showcasing the potential of our concepts. Our contribution provides insights into the design and interaction of data-driven stories in immersive environments.

@inproceedings{luo2024storybook, author = {Luo, Weizhou and Abdelaziz, Mark and M\'{e}ndez, Juli\'{a}n and Rzayev, Rufat}, title = {Between Pages and Reality: Exploring a Book Metaphor for Unfolding Data-driven Storytelling in Immersive Environments}, booktitle = {Proceedings of 2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)}, series = {ISMAR '24}, year = {2024}, month = oct, location = {Seattle}, doi = {10.1109/ISMAR-Adjunct64951.2024.00077}, publisher = {IEEE} }

- CHI ’24 Workshop

Don’t Leave Me Out: Designing for Device Inclusivity in Mixed Reality Collaboration. Katja Krug, Julián Méndez, Weizhou Luo, and Raimund Dachselt. In CHI 2024 Workshop on Designing Inclusive Future Augmented Realities, Honolulu, HI, USA, May 2024.

@inproceedings{krug2024inclusivemr, author = {Krug, Katja and M\'{e}ndez, Juli\'{a}n and Luo, Weizhou and Dachselt, Raimund}, title = {Don't Leave Me Out: Designing for Device Inclusivity in Mixed Reality Collaboration}, booktitle = {CHI 2024 Workshop on Designing Inclusive Future Augmented Realities}, year = {2024}, month = may, location = {Honolulu, HI, USA}, doi = {10.48550/arXiv.2409.05374} }

- CHI EA ’24

Exploring Spatial Organization Strategies for Virtual Content in Mixed Reality Environments. Weizhou Luo. In Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, May 2024.

Our future will likely be reshaped by Mixed Reality (MR) offering boundless display space while preserving the context of real-world surroundings. However, to fully leverage the spatial capabilities of MR technology, a better understanding of how and where to place virtual content like documents is required, particularly considering the situated context. I aim to explore spatial organization strategies for virtual content in MR environments. For that, we conducted empirical studies investigating users’ strategies for document layout and placement and examined two real-world factors: physical environments and people present. With this knowledge, we proposed a mixed-reality approach for the in-situ exploration and analysis of human movement data utilizing physical objects in the original space as referents. My next steps include exploring arrangement strategies, designing techniques empowering spatial organization, and extending understandings for multi-user scenarios. My dissertation will enrich the immersive interface repertoire and contribute to the design of future MR systems.

@inproceedings{luo2024exploring, author = {Luo, Weizhou}, title = {Exploring Spatial Organization Strategies for Virtual Content in Mixed Reality Environments}, booktitle = {Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems}, series = {CHI EA '24}, year = {2024}, month = may, location = {Honolulu, HI, USA}, numpages = {6}, doi = {10.1145/3613905.3638181}, publisher = {ACM}, address = {New York, NY, USA}, keywords = {Spatiality, spatial layout, content organization, affordance, Augmented Reality, Mixed Reality} }

2023

- CHI ’23

PEARL: Physical Environment based Augmented Reality Lenses for In-Situ Human Movement Analysis. Weizhou Luo, Zhongyuan Yu, Rufat Rzayev, Marc Satkowski, Stefan Gumhold, Matthew McGinity, and Raimund Dachselt. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, May 2023.

This paper presents Pearl, a mixed-reality approach for the analysis of human movement data in situ. As the physical environment shapes human motion and behavior, the analysis of such motion can benefit from the direct inclusion of the environment in the analytical process. We present methods for exploring movement data in relation to surrounding regions of interest, such as objects, furniture, and architectural elements. We introduce concepts for selecting and filtering data through direct interaction with the environment, and a suite of visualizations for revealing aggregated and emergent spatial and temporal relations. More sophisticated analysis is supported through complex queries comprising multiple regions of interest. To illustrate the potential of Pearl, we developed an Augmented Reality-based prototype and conducted expert review sessions and scenario walkthroughs in a simulated exhibition. Our contribution lays the foundation for leveraging the physical environment in the in-situ analysis of movement data.

@inproceedings{luo2023pearl, author = {Luo, Weizhou and Yu, Zhongyuan and Rzayev, Rufat and Satkowski, Marc and Gumhold, Stefan and McGinity, Matthew and Dachselt, Raimund}, title = {PEARL: Physical Environment based Augmented Reality Lenses for In-Situ Human Movement Analysis}, year = {2023}, isbn = {9781450394215}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3544548.3580715}, doi = {10.1145/3544548.3580715}, booktitle = {Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems}, articleno = {381}, numpages = {15}, keywords = {physical referents, movement data analysis, augmented/mixed reality, affordance, In-situ visualization, Immersive Analytics}, location = {Hamburg, Germany}, series = {CHI '23} }

- CHI EA ’23

Spatiality and Semantics - Towards Understanding Content Placement in Mixed Reality. Mats Ole Ellenberg, Marc Satkowski, Weizhou Luo, and Raimund Dachselt. In Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, May 2023.

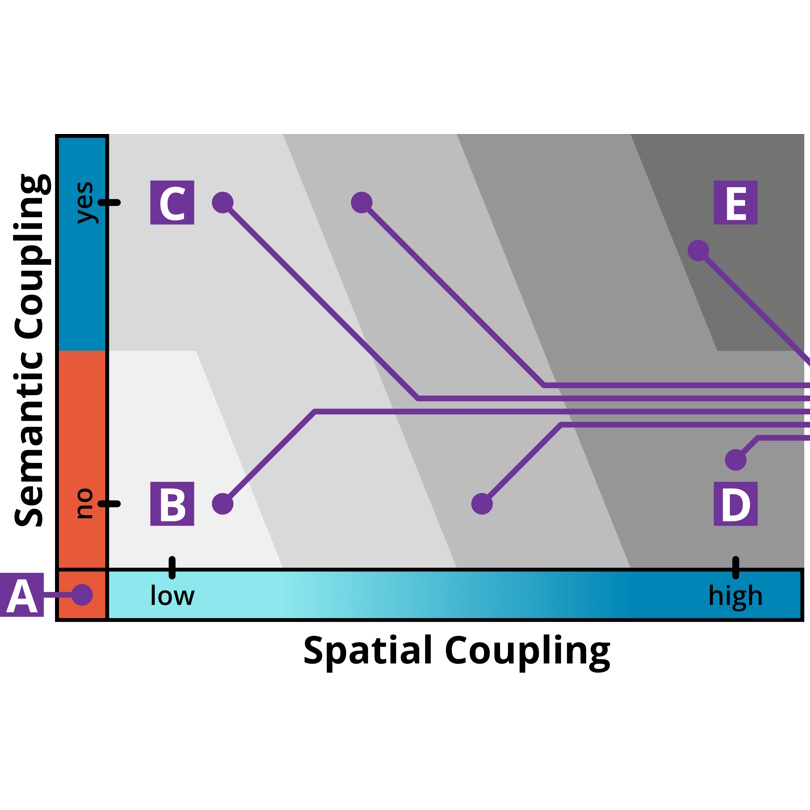

Mixed Reality (MR) popularizes numerous situated applications where virtual content is spatially integrated into our physical environment. However, we only know little about what properties of an environment influence the way how people place digital content and perceive the resulting layout. We thus conducted a preliminary study (N = 8) examining how physical surfaces affect organizing virtual content like documents or charts, focusing on user perception and experience. We found, among others, that the situated layout of virtual content in its environment can be characterized by the level of spatial as well as semantic coupling. Consequently, we propose a two-dimensional design space to establish the vocabularies and detail their parameters for content organization. With our work, we aim to facilitate communication between designers or researchers, inform general MR interface design, and provide a first step towards future MR workspaces empowered by blending digital content and its real-world context.

@inproceedings{ellenberg2023spatiality, author = {Ellenberg, Mats Ole and Satkowski, Marc and Luo, Weizhou and Dachselt, Raimund}, title = {Spatiality and Semantics - Towards Understanding Content Placement in Mixed Reality}, year = {2023}, isbn = {9781450394222}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3544549.3585853}, doi = {10.1145/3544549.3585853}, booktitle = {Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems}, articleno = {254}, numpages = {8}, keywords = {Augmented Reality, Content Organization, Design Space, Layout, Mixed Reality, User Study}, location = {Hamburg, Germany}, series = {CHI EA '23} }

2022

- CHI ’22

Where Should We Put It? Layout and Placement Strategies of Documents in Augmented Reality for Collaborative Sensemaking. Weizhou Luo, Anke Lehmann, Hjalmar Widengren, and Raimund Dachselt. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, May 2022.

Future offices are likely reshaped by Augmented Reality (AR) extending the display space while maintaining awareness of surroundings, and thus promise to support collaborative tasks such as brainstorming or sensemaking. However, it is unclear how physical surroundings and co-located collaboration influence the spatial organization of virtual content for sensemaking. Therefore, we conducted a study (N=28) to investigate the effect of office environments and work styles during a document classification task using AR with regard to content placement, layout strategies, and sensemaking workflows. Results show that participants require furniture, especially tables and whiteboards, to assist sensemaking and collaboration regardless of room settings, while generous free spaces (e.g., walls) are likely used when available. Moreover, collaborating participants tend to use furniture despite personal layout preferences. We identified different placement and layout strategies, as well as the transitions in-between. Finally, we propose design implications for future immersive sensemaking applications and beyond.

@inproceedings{luo2022where, author = {Luo, Weizhou and Lehmann, Anke and Widengren, Hjalmar and Dachselt, Raimund}, title = {Where Should We Put It? Layout and Placement Strategies of Documents in Augmented Reality for Collaborative Sensemaking}, year = {2022}, isbn = {9781450391573}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3491102.3501946}, doi = {10.1145/3491102.3501946}, booktitle = {Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems}, articleno = {627}, numpages = {16}, keywords = {spatiality, spatial layout, sensemaking, qualitative user study, content organization, collaborative sensemaking, affordance, Mixed Reality, Augmented Reality}, location = {New Orleans, LA, USA}, series = {CHI '22} }

2021

- CHI EA ’21

Investigating Document Layout and Placement Strategies for Collaborative Sensemaking in Augmented Reality. Weizhou Luo, Anke Lehmann, Yushan Yang, and Raimund Dachselt. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, May 2021.

Augmented Reality (AR) has the potential to revolutionize our workspaces, since it considerably extends the limits of current displays while keeping users aware of their collaborators and surroundings. Collective activities like brainstorming and sensemaking often use space for arranging documents and information and thus will likely benefit from AR-enhanced offices. Until now, there has been very little research on how the physical surroundings might affect virtual content placement for collaborative sensemaking. We therefore conducted an initial study with eight participants in which we compared two different room settings for collaborative image categorization regarding content placement, spatiality, and layout. We found that participants tend to utilize the room’s vertical surfaces as well as the room’s furniture, particularly through edges and gaps, for placement and organization. We also identified three different spatial layout patterns (panoramic-strip, semi-cylindrical layout, furniture-based distribution) and observed the usage of temporary storage spaces specifically for collaboration.

@inproceedings{luo2021investigating, author = {Luo, Weizhou and Lehmann, Anke and Yang, Yushan and Dachselt, Raimund}, title = {Investigating Document Layout and Placement Strategies for Collaborative Sensemaking in Augmented Reality}, year = {2021}, isbn = {9781450380959}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3411763.3451588}, doi = {10.1145/3411763.3451588}, booktitle = {Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems}, articleno = {456}, numpages = {7}, keywords = {content organization, grouping, physical environment, sensemaking, spatiality, user study}, location = {Yokohama, Japan}, series = {CHI EA '21} }

- ISMAR-Adj ’21

Exploring and Slicing Volumetric Medical Data in Augmented Reality Using a Spatially-Aware Mobile Device [🏅Best Poster] [🏅Demo Honorable Mention]. Weizhou Luo, Eva Goebel, Patrick Reipschläger, Mats Ole Ellenberg, and Raimund Dachselt. In 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Oct 2021.

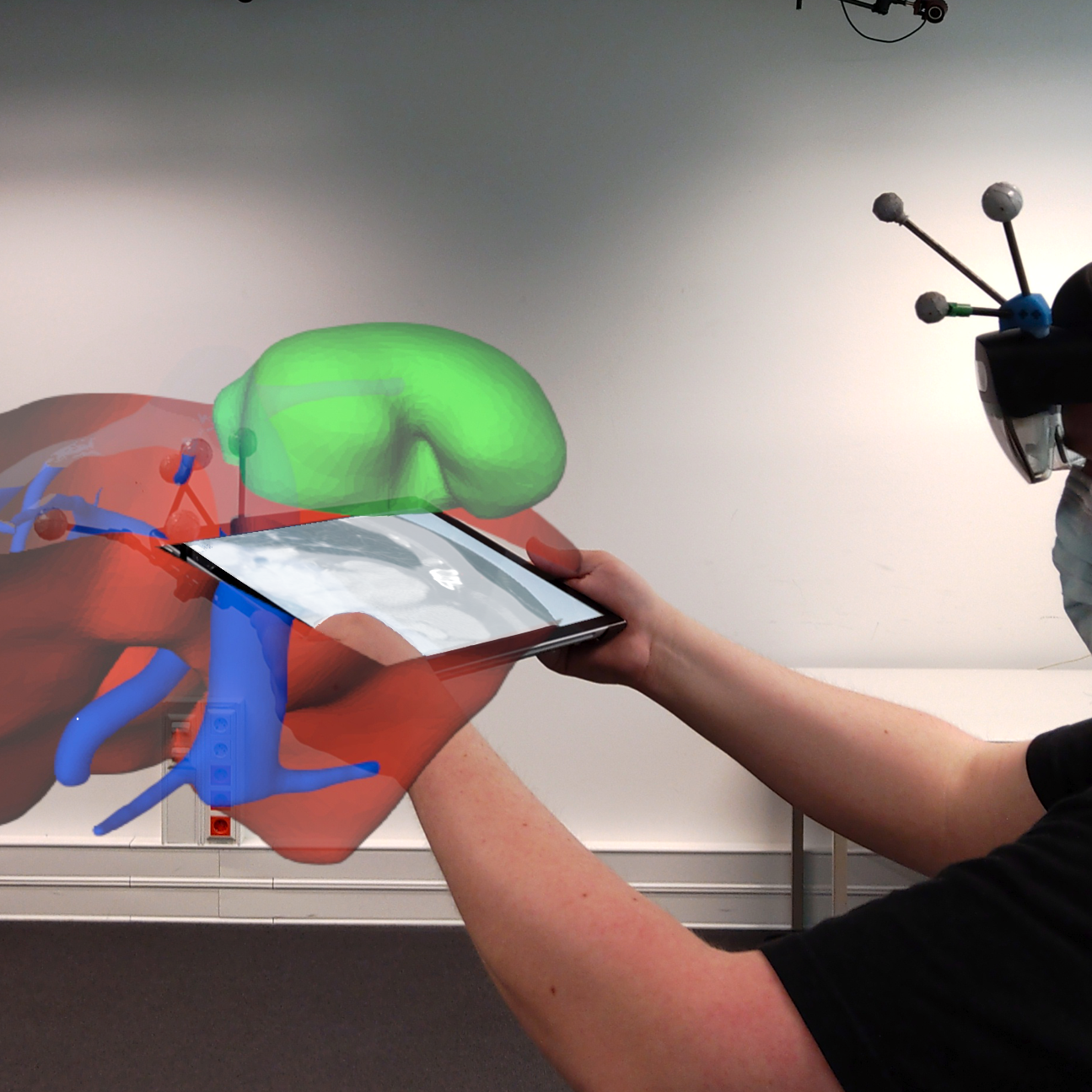

We present a concept and early prototype for exploring volumetric medical data, e.g., from MRI or CT scans, in head-mounted Augmented Reality (AR) with a spatially tracked tablet. Our goal is to address the lack of immersion and intuitive input of conventional systems by providing spatial navigation to extract arbitrary slices from volumetric data directly in three-dimensional space. A 3D model of the medical data is displayed in the real environment, fixed to a particular location, using AR. The tablet is spatially moved through this virtual 3D model and shows the resulting slices as 2D images. We present several techniques that facilitate this overall concept, e.g., to place and explore the model, as well as to capture, annotate, and compare slices of the data. Furthermore, we implemented a proof-of-concept prototype that demonstrates the feasibility of our concepts. With our work we want to improve the current way of working with volumetric data slices in the medical domain and beyond.

@inproceedings{luo2021exploring, author = {Luo, Weizhou and Goebel, Eva and Reipschläger, Patrick and Ellenberg, Mats Ole and Dachselt, Raimund}, booktitle = {2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)}, title = {Exploring and Slicing Volumetric Medical Data in Augmented Reality Using a Spatially-Aware Mobile Device [🏅Best Poster] [🏅Demo Honorable Mention] }, year = {2021}, volume = {}, number = {}, pages = {334-339}, keywords = {Solid modeling;Three-dimensional displays;Navigation;Magnetic resonance imaging;Prototypes;Mobile handsets;Data models;Human-centered computing;Mixed/Augmented Reality;Visualization}, doi = {10.1109/ISMAR-Adjunct54149.2021.00076} }

- ISMAR-Adj ’21

Towards In-situ Authoring of AR Visualizations with Mobile Devices. Marc Satkowski, Weizhou Luo, and Raimund Dachselt. In 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Oct 2021.

Augmented Reality (AR) has been shown to enhance the data visualization and analysis process by supporting users in their immersive exploration of data in a real-world context. However, authoring such visualizations still heavily relies on traditional, stationary desktop setups, which inevitably separates users from the actual working space. To better support the authoring process in immersive environments, we propose the integration of spatially-aware mobile devices. Such devices also enable precise touch interaction for data configuration while lowering the entry barriers of novel immersive technologies. We therefore contribute an initial set of concepts within a scenario for authoring AR visualizations. We implemented an early prototype for configuring visualizations in-situ on the mobile device without programming and report our first impressions.

@inproceedings{mac2021towards, author = {Satkowski, Marc and Luo, Weizhou and Dachselt, Raimund}, booktitle = {2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)}, title = {Towards In-situ Authoring of AR Visualizations with Mobile Devices}, year = {2021}, volume = {}, number = {}, pages = {324-325}, keywords = {Data visualization;Prototypes;Mobile handsets;Augmented reality;Space stations;Human-centered computing;Mixed/Augmented Reality;Visualization}, doi = {10.1109/ISMAR-Adjunct54149.2021.00073}, issn = {}, month = oct }

- VIS Poster ’21

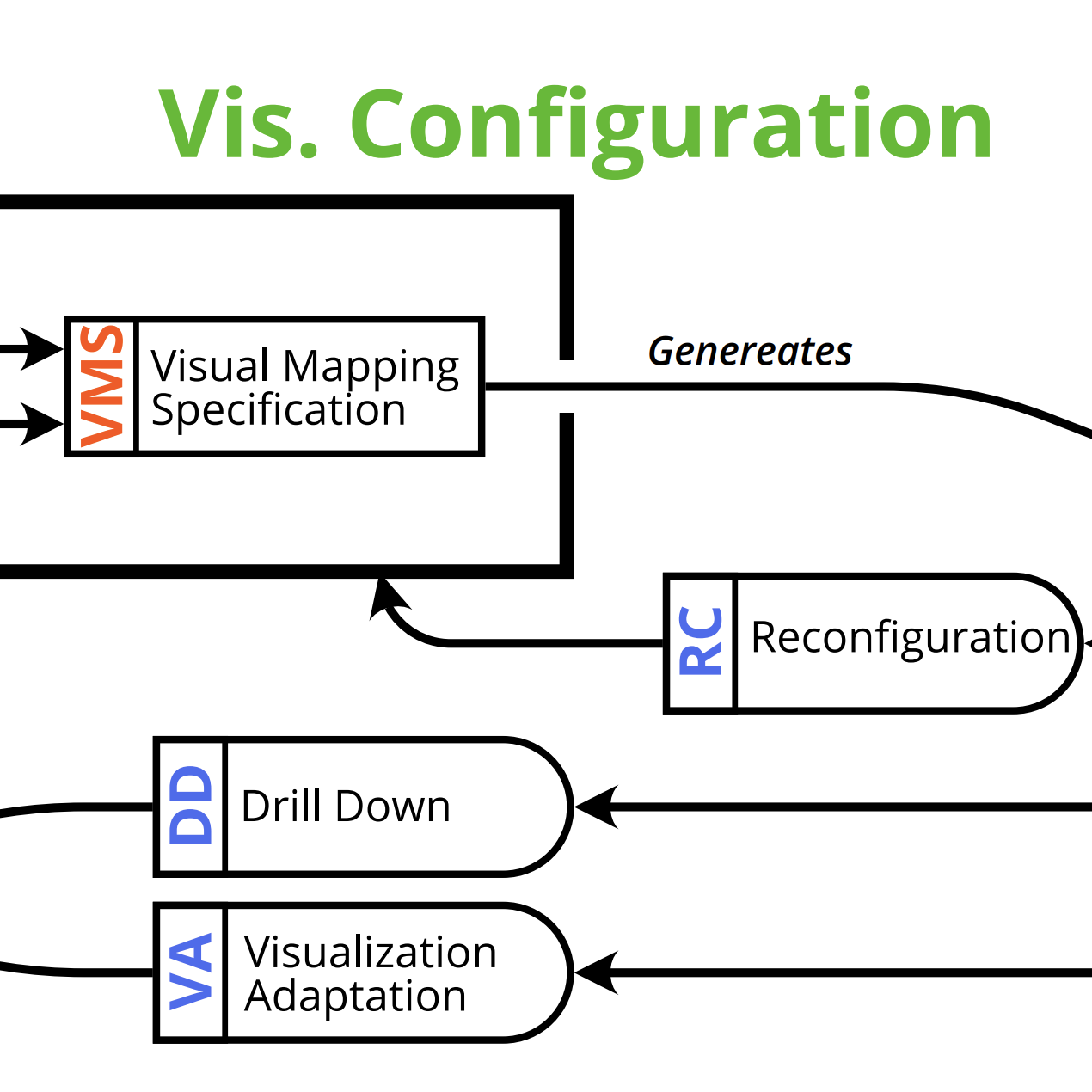

A Visualization Authoring Model for Post-WIMP Interfaces. Marc Satkowski, Weizhou Luo, and Raimund Dachselt. In Poster Program of the 2021 IEEE VIS Conference, Virtual Event, Oct 2021.

Besides the ability to utilize visualizations, the process of creating and authoring them is of equal importance. However, for visualization environments beyond the desktop, like multi-display or immersive analytics environments, this process is often decoupled from the place where the visualization is actually used. This separation makes it hard for authors, developers, or users of such systems to understand, what consequences different choices they made will have for the created visualizations. We present an extended visualization authoring model for Post-WIMP interfaces, which support designers by a more seamless approach of developing and utilizing visualizations. With it, our emphasis is on the iterative nature of creating and configuring visualizations, the existence of multiple views in the same system, and requirements for the data analysis process.

@inproceedings{satkowski2021visualization, author = {Satkowski, Marc and Luo, Weizhou and Dachselt, Raimund}, title = {A Visualization Authoring Model for Post-WIMP Interfaces}, booktitle = {Poster Program of the 2021 IEEE VIS Conference}, year = {2021}, month = oct, location = {Virtual Event}, numpages = {2}, doi = {10.48550/arXiv.2110.14312}, url = {https://arxiv.org/abs/2110.14312} }

2019

- HCIS

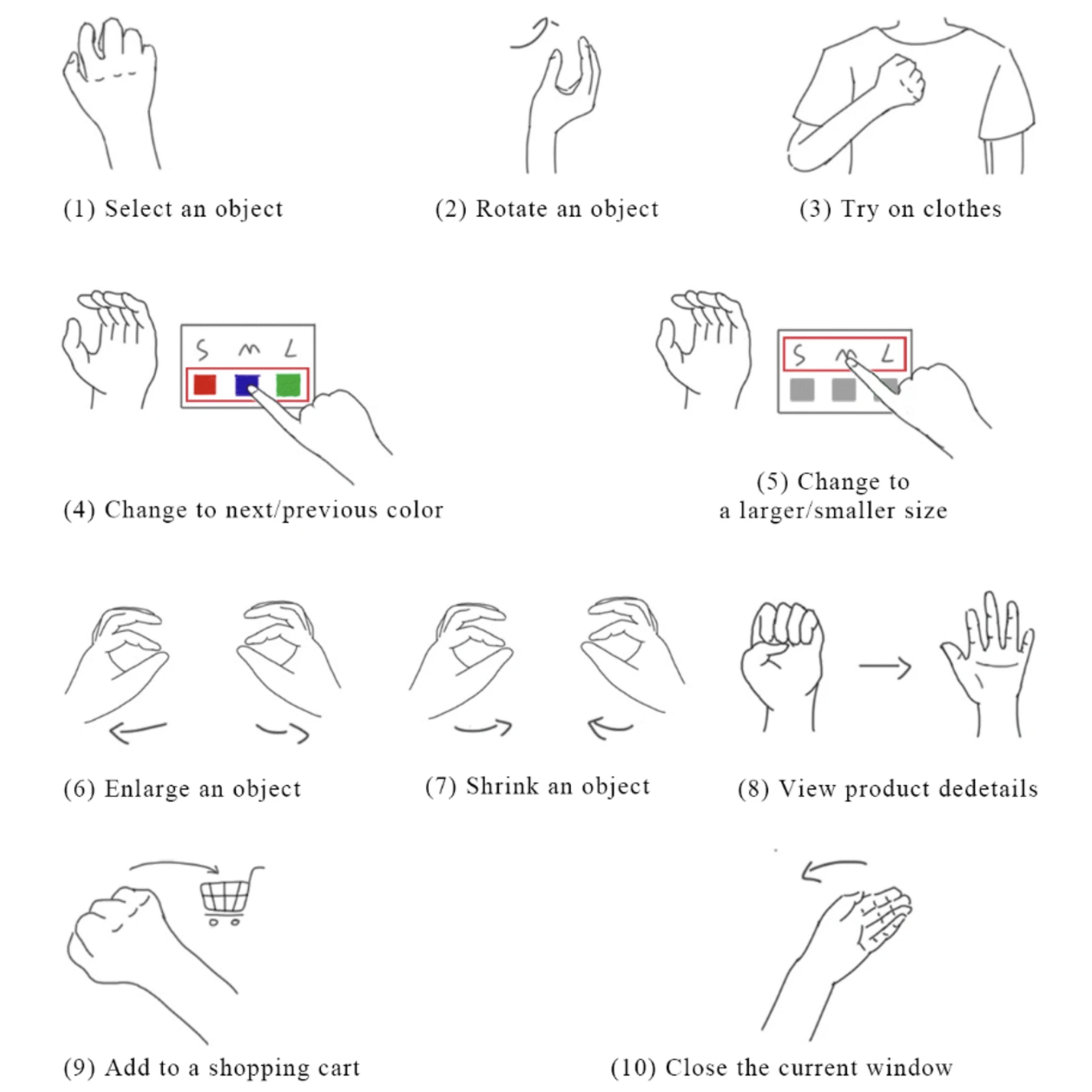

Understanding freehand gestures: a study of freehand gestural interaction for immersive VR shopping applications. Huiyue Wu, Weizhou Luo, Neng Pan, Shenghuan Nan, Yanyi Deng, Shengqian Fu, and Liuqingqing Yang. Human-centric Computing and Information Sciences, Dec 2019.

Unlike retail stores, in which the user is forced to be physically present and active during restricted opening hours, online shops may be more convenient, functional and efficient. However, traditional online shops often have a narrow bandwidth for product visualizations and interactive techniques and lack a compelling shopping context. In this paper, we report a study on eliciting user-defined gestures for shopping tasks in an immersive VR (virtual reality) environment. We made a methodological contribution by providing a varied practice for producing more usable freehand gestures than traditional elicitation studies. Using our method, we developed a gesture taxonomy and generated a user-defined gesture set. To validate the usability of the derived gesture set, we conducted a comparative study and answered questions related to the performance, error count, user preference and effort required from end-users to use freehand gestures compared with traditional immersive VR interaction techniques, such as the virtual handle controller and ray-casting techniques. Experimental results show that the freehand-gesture-based interaction technique was rated to be the best in terms of task load, user experience, and presence without the loss of performance (i.e., speed and error count). Based on our findings, we also developed several design guidelines for gestural interaction.

@article{wu2019understanding, author = {Wu, Huiyue and Luo, Weizhou and Pan, Neng and Nan, Shenghuan and Deng, Yanyi and Fu, Shengqian and Yang, Liuqingqing}, title = {Understanding freehand gestures: a study of freehand gestural interaction for immersive VR shopping applications}, journal = {Human-centric Computing and Information Sciences}, volume = {9}, number = {1}, year = {2019}, month = dec, pages = {43}, doi = {10.1186/s13673-019-0204-7}, publisher = {Springer} }